What if the key to unlocking your device’s full potential lies in the unseen world of silicon and circuits? Modern electronics rely on precision engineering at the smallest scales, where every nanometer impacts speed, efficiency, and reliability. From smartphones to supercomputers, the heart of these systems beats with carefully crafted logic and data pathways.

Over decades, advancements in computer architecture have transformed how instructions are processed. Early models focused on raw speed, but today’s challenges demand smarter resource allocation. By integrating digital logic with streamlined instruction flows, engineers improve performance without driving up power consumption.

This article explores practical steps for improving processor performance. You’ll learn how historical lessons shape modern techniques, such as balancing clock speed against thermal limits, and why adaptability matters in fast-paced industries. Whether you’re a student or a seasoned professional, the insights here bridge theory and real-world application.

Key Takeaways

- Processor architecture directly impacts device speed, energy use, and functionality.

- Effective integration of logic gates and data pathways ensures smoother instruction execution.

- Historical advancements inform today’s best practices for overcoming engineering limitations.

- Practical design requires balancing technical specs with real-world performance benchmarks.

- Modern optimization strategies address both computational power and thermal management.

Fundamentals of Microprocessor Design

Behind every efficient computing device lies a meticulously structured framework of interconnected components. These elements work in harmony to execute tasks at lightning speeds while managing power consumption. Let’s break down how these parts function together to shape system outcomes.

Understanding Basic Components

At the core of any processor are three critical units: the arithmetic logic unit (ALU), registers, and the control unit. The ALU performs arithmetic and logical operations, registers hold temporary data for rapid access, and the control unit sequences the work. Cache memory, covered below, supports all three. Here’s how they compare:

| Component | Function | Impact on Performance |
|---|---|---|
| ALU | Executes calculations | Determines processing speed |
| Registers | Hold active data | Reduces memory latency |
| Control Unit | Directs operations | Optimizes instruction flow |
| Cache | Stores frequent data | Boosts system responsiveness |

Cache memory plays a pivotal role by keeping recently and frequently used data close at hand. It is much smaller than main memory, but it sits closer to the processor core, so retrieval is faster.
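To make the effect of a cache concrete, here is a minimal sketch of a direct-mapped cache in Python. The line count, block size, and names such as `DirectMappedCache` and `hit_rate` are assumptions chosen for illustration and do not describe any particular processor.

```python
from dataclasses import dataclass, field


@dataclass
class DirectMappedCache:
    """Toy direct-mapped cache: each memory block maps to exactly one line."""
    num_lines: int = 4      # hypothetical number of cache lines
    block_size: int = 16    # hypothetical bytes per line
    tags: dict = field(default_factory=dict)  # line index -> stored tag
    hits: int = 0
    misses: int = 0

    def access(self, address: int) -> bool:
        """Return True on a hit; on a miss, fill the line and return False."""
        block = address // self.block_size
        index = block % self.num_lines
        tag = block // self.num_lines
        if self.tags.get(index) == tag:
            self.hits += 1
            return True
        self.tags[index] = tag
        self.misses += 1
        return False


def hit_rate(addresses, cache=None):
    """Replay an address trace and return the fraction of accesses that hit."""
    if cache is None:
        cache = DirectMappedCache()
    for address in addresses:
        cache.access(address)
    total = cache.hits + cache.misses
    return cache.hits / total if total else 0.0


if __name__ == "__main__":
    # Repeated accesses within one block hit after the first miss.
    print(hit_rate([0, 4, 8, 12, 0, 4, 8, 12]))
    # Two blocks that map to the same line keep evicting each other.
    print(hit_rate([0, 64, 0, 64]))
```

The second trace shows why cache organization matters: both addresses compete for the same line, so every access misses even though the cache is mostly empty.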

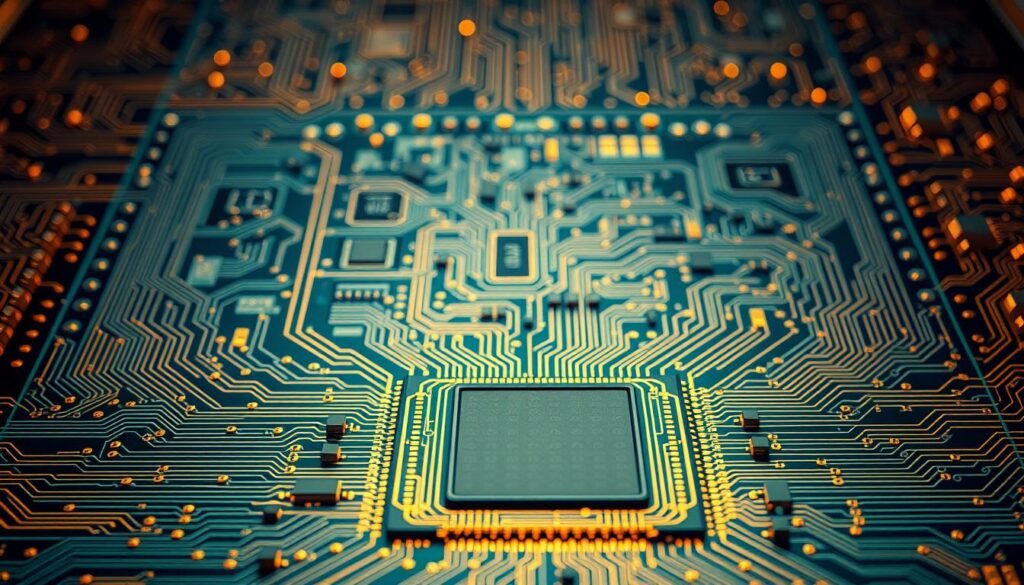

Digital Logic and Instruction Sets

Digital logic gates form the foundation of all computational tasks. These gates create pathways for binary data, translating software commands into hardware actions.

“The elegance of a processor lies in how simply it solves complex problems,” notes a senior Intel engineer.
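As an illustration of how logic gates combine into arithmetic, the sketch below builds a one-bit full adder from AND, OR, and XOR functions and chains it into a ripple-carry adder. The function names and the 4-bit default width are assumptions made for this example.

```python
def AND(a: int, b: int) -> int:
    return a & b


def OR(a: int, b: int) -> int:
    return a | b


def XOR(a: int, b: int) -> int:
    return a ^ b


def full_adder(a: int, b: int, carry_in: int):
    """Add three single bits; return (sum_bit, carry_out)."""
    partial = XOR(a, b)
    sum_bit = XOR(partial, carry_in)
    carry_out = OR(AND(a, b), AND(partial, carry_in))
    return sum_bit, carry_out


def ripple_add(x: int, y: int, width: int = 4):
    """Add two unsigned integers bit by bit; return (result, final_carry)."""
    carry = 0
    result = 0
    for i in range(width):
        bit, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= bit << i
    return result, carry


if __name__ == "__main__":
    print(ripple_add(5, 6))   # fits in 4 bits
    print(ripple_add(9, 8))   # overflows 4 bits, so the final carry is set
```

A hardware adder works the same way in principle, except that the gates are physical circuits and the carry propagates as an electrical signal.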

Instruction sets act as a bridge between software and hardware. They define how commands like “add” or “move” are executed physically. Designers use hardware description languages such as Verilog or VHDL to specify these interactions, ensuring precise control over data flow.
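The idea of an instruction set can be sketched in software with a toy interpreter. The three instructions below (`movi`, `move`, `add`) and the four-register machine are invented for illustration and do not correspond to any real ISA.

```python
def run(program, num_registers=4):
    """Execute a list of (opcode, operand...) tuples on a toy register machine."""
    regs = [0] * num_registers
    pc = 0  # program counter: index of the next instruction
    while pc < len(program):
        op, *args = program[pc]
        if op == "movi":      # movi dst, value: load a constant into a register
            dst, value = args
            regs[dst] = value
        elif op == "move":    # move dst, src: copy one register to another
            dst, src = args
            regs[dst] = regs[src]
        elif op == "add":     # add dst, a, b: dst = regs[a] + regs[b]
            dst, a, b = args
            regs[dst] = regs[a] + regs[b]
        else:
            raise ValueError(f"unknown opcode: {op}")
        pc += 1
    return regs


if __name__ == "__main__":
    program = [
        ("movi", 0, 2),
        ("movi", 1, 3),
        ("add", 2, 0, 1),
        ("move", 3, 2),
    ]
    print(run(program))
```

The `if`/`elif` chain plays the role of the control unit, the `regs` list stands in for the register file, and the `+` is the ALU operation.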

Navigating the Microprocessor Design Process

Creating high-performance processors demands a blend of precision and adaptability. Engineers must balance theoretical frameworks with practical constraints, ensuring every decision aligns with end-user needs. This phase transforms abstract concepts into tangible systems through structured methodologies and iterative refinement.

Design Steps and Methodologies

The journey begins with defining core objectives, such as speed targets or power limits. Teams outline data pathways and design parameters early to avoid costly revisions. Finite state machines help model behavior, while simulation tools predict how circuits handle real-world tasks.
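A finite state machine can be modeled in a few lines before any hardware is described. The sketch below is a Mealy-style detector that outputs 1 whenever the input stream ends in the bit pattern 101. The state names and the pattern are assumptions chosen for illustration.

```python
# (current_state, input_bit) -> (next_state, output_bit)
TRANSITIONS = {
    ("IDLE", 0): ("IDLE", 0),
    ("IDLE", 1): ("GOT_1", 0),
    ("GOT_1", 0): ("GOT_10", 0),
    ("GOT_1", 1): ("GOT_1", 0),
    ("GOT_10", 0): ("IDLE", 0),
    ("GOT_10", 1): ("GOT_1", 1),  # pattern 101 completed; the final 1 may start a new match
}


def detect(bits, state="IDLE"):
    """Feed bits through the state machine and return the output for each step."""
    outputs = []
    for bit in bits:
        state, out = TRANSITIONS[(state, bit)]
        outputs.append(out)
    return outputs


if __name__ == "__main__":
    print(detect([1, 0, 1, 0, 1]))
```

Keeping the behavior in a transition table makes it straightforward to review every state and input combination, which is the same discipline a hardware implementation requires.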

Time management proves critical during prototyping. Parallel workflows accelerate development, but synchronization remains essential to prevent bottlenecks. One automotive chip team reduced their development cycle by 22% using agile validation checklists.

Developing the Instruction Set Architecture

An effective ISA acts as the processor’s linguistic foundation. Designers map commands like “load” or “compare” to binary patterns, ensuring compatibility with software ecosystems. Memory hierarchy decisions—such as cache sizes—directly influence how quickly these instructions execute.

“A well-crafted ISA turns hardware limitations into opportunities for optimization.”
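Mapping commands to binary patterns can be sketched as a pair of encode and decode functions. The 16-bit format below (a 4-bit opcode followed by three 4-bit fields) and the opcode numbers are hypothetical, invented only to show the mechanics.

```python
# Hypothetical opcode assignments for a 16-bit instruction word:
# [15:12] opcode, [11:8] destination, [7:4] source 1, [3:0] source 2
OPCODES = {"load": 0x1, "compare": 0x2, "add": 0x3, "move": 0x4}
MNEMONICS = {code: name for name, code in OPCODES.items()}


def encode(op: str, rd: int, rs: int, rt: int = 0) -> int:
    """Pack a mnemonic and three 4-bit fields into one 16-bit word."""
    for value in (rd, rs, rt):
        if not 0 <= value <= 0xF:
            raise ValueError(f"field out of range for 4 bits: {value}")
    return (OPCODES[op] << 12) | (rd << 8) | (rs << 4) | rt


def decode(word: int):
    """Unpack a 16-bit word into (mnemonic, rd, rs, rt)."""
    opcode = (word >> 12) & 0xF
    return MNEMONICS[opcode], (word >> 8) & 0xF, (word >> 4) & 0xF, word & 0xF


if __name__ == "__main__":
    word = encode("add", 1, 2, 3)
    print(f"{word:016b}")
    print(decode(word))
```

Fixed-width fields like these keep decoding simple, at the cost of limiting how many registers and opcodes the format can name.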

Testing reveals hidden flaws before production. Techniques like formal verification confirm logic integrity, while stress simulations assess thermal resilience. These steps ensure the final product meets both technical specs and market demands.

Strategies for Achieving Peak Performance

Peak performance in computing isn’t accidental—it’s engineered through deliberate optimization strategies. Over the years, engineers have refined techniques to squeeze maximum capability from silicon-based systems while managing thermal and power constraints. Let’s explore how modern approaches balance speed, efficiency, and reliability.

Performance Benchmarks and Metrics

Critical metrics like instructions per cycle (IPC) and power efficiency guide optimization efforts. Figures drawn from technical books show how typical values have shifted:

| Metric | 1990s Baseline | 2020s Target | Improvement Factor |
|---|---|---|---|
| Clock Speed | 200 MHz | 5 GHz | 25x |
| Power Use | 60 W | 15 W | 75% reduction |
| Cache Size | 256 KB | 32 MB | 128x |

These benchmarks help students evaluate tradeoffs between speed and energy consumption during the development process.
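The arithmetic behind these metrics is simple enough to check directly. The helper names below are assumptions made for this sketch. The input values come from the table above, except for the instruction and cycle counts in the IPC example, which are made up.

```python
def ipc(instructions_retired: int, cycles: int) -> float:
    """Instructions per cycle: completed instructions divided by elapsed cycles."""
    return instructions_retired / cycles


def improvement_factor(baseline: float, target: float) -> float:
    """How many times larger the target is than the baseline."""
    return target / baseline


def percent_reduction(baseline: float, target: float) -> float:
    """Percentage by which the target falls below the baseline."""
    return (baseline - target) / baseline * 100


def execution_time_s(instructions: int, ipc_value: float, clock_hz: float) -> float:
    """Run time in seconds = instructions / (IPC x clock frequency)."""
    return instructions / (ipc_value * clock_hz)


if __name__ == "__main__":
    print(improvement_factor(200e6, 5e9))        # clock speed, 200 MHz to 5 GHz
    print(percent_reduction(60, 15))             # power use, 60 W to 15 W
    print(improvement_factor(256, 32 * 1024))    # cache size in KB, 256 KB to 32 MB
    print(ipc(8_000, 4_000))                     # made-up counts for illustration
```

The `execution_time_s` relationship is why clock speed alone is a poor guide: a design with a lower clock but a higher IPC can finish the same work sooner.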

Optimization Techniques and Best Practices

Parallel processing splits tasks across multiple cores, while cache optimization reduces data retrieval delays. A 2022 case study showed how restructuring instruction pipelines boosted smartphone responsiveness by 40%. Technical manuals emphasize using a hardware description language to model control unit interactions before fabrication.
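To see why pipeline structure matters, consider the standard idealized cycle count for a pipeline: the first instruction takes one cycle per stage, and each later instruction completes one cycle after the previous one, plus any stall cycles. The sketch below applies that textbook model. The stage count and stall numbers are hypothetical, and the model ignores real-world effects such as cache misses.

```python
def unpipelined_cycles(n_instructions: int, stages: int) -> int:
    """Each instruction passes through every stage before the next one starts."""
    return n_instructions * stages


def pipelined_cycles(n_instructions: int, stages: int, stall_cycles: int = 0) -> int:
    """Idealized pipeline: fill once, then retire one instruction per cycle."""
    if n_instructions == 0:
        return 0
    return stages + (n_instructions - 1) + stall_cycles


def speedup(n_instructions: int, stages: int, stall_cycles: int = 0) -> float:
    """Ratio of unpipelined to pipelined cycle counts."""
    return unpipelined_cycles(n_instructions, stages) / pipelined_cycles(
        n_instructions, stages, stall_cycles
    )


if __name__ == "__main__":
    # 100 instructions on a hypothetical 5-stage pipeline, with and without stalls.
    print(pipelined_cycles(100, 5), round(speedup(100, 5), 2))
    print(pipelined_cycles(100, 5, stall_cycles=20), round(speedup(100, 5, 20), 2))
```

Under this model, reducing stalls is what moves the speedup toward the stage count, which is the kind of gain that restructuring a pipeline aims for.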

Incorporating Modern Design Paradigms

AI-driven tools now simulate thermal behavior and power distribution in real time. One automotive team used these systems to cut validation time by 18 months. As noted in Advanced Computing Architectures, “Clear technical language in instruction sets prevents misinterpretation during manufacturing.”

These strategies enable devices to handle complex workloads while maintaining stability—a requirement for today’s data-driven applications.

Conclusion

The journey from concept to high-performing hardware demands equal parts precision and collaboration. Through exploring core components, instruction sets, and optimization strategies, we’ve seen how engineering excellence shapes modern electronics. Every decision—from register placement to thermal management—impacts real-world use.

Historical lessons guide today’s manufacturing standards. Teams that balance speed benchmarks with energy efficiency create devices ready for tomorrow’s challenges. Clear technical descriptions of data pathways ensure smooth translation from blueprints to silicon.

Success hinges on teamwork across disciplines. Electrical engineers, software developers, and production specialists must align their goals. This cooperative approach turns theoretical models into reliable products that power our connected world.

Apply these methods to your projects. Test rigorously, iterate often, and prioritize adaptable solutions. Stay curious about emerging tools like AI-driven simulation or advanced cooling techniques. The field evolves rapidly—continuous learning ensures your skills remain relevant.

Ready to push boundaries? Dive deeper into case studies, experiment with open-source architectures, and engage with industry forums. Your next breakthrough could redefine what’s possible in hardware innovation.